Ensuring data integrity is crucial, yet data inaccuracies persist.

This article outlines practical techniques to detect and correct data swapping and mislabeling, restoring accuracy.

Learn common causes of errors and their impacts, then apply automated validation rules, statistical methods, and specialized correction protocols to enhance data quality across operations.

Tackling Data Swapping and Mislabeling for Enhanced Data Integrity

Understanding Data Swapping and Mislabeling

Data swapping occurs when values are inadvertently exchanged between records or data points, so that each record ends up holding data that belongs to another. For example, two customer IDs in a database may get switched.

Mislabeling refers to data being incorrectly categorized or labeled. For instance, a data field for "product type" gets mislabeled as "product name."

Both errors can stem from human mistakes during data entry or extraction, system glitches, flawed data ingestion processes, and more. While subtle, these inaccuracies degrade data quality and integrity.

Identifying the Root Causes of Data Inaccuracies

Common sources of data swapping and mislabeling include:

- Manual data entry errors by analysts or non-technical staff

- Bugs in data pipelines, scripts, or models leading to scrambled data

- Flawed data integration from multiple sources

- Insufficient validation rules and quality checks

- Outdated taxonomies and metadata standards

Swapping often occurs when data is joined between systems on match keys that look alike but do not identify the same entity. Mislabeling frequently arises from outdated schema definitions.
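One way to catch risky match keys before a join is to check whether the key is still unique once it is normalized. The sketch below is illustrative only: the `ambiguous_keys` helper, the `email` and `customer_id` field names, and the sample records are assumptions, not part of any particular system.

```python
from collections import Counter

def ambiguous_keys(records, key):
    """Return normalized key values shared by more than one record."""
    normalized = (str(r[key]).strip().lower() for r in records)
    counts = Counter(normalized)
    return sorted(k for k, n in counts.items() if n > 1)

# Hypothetical CRM extract: two different customers collapse to one email
# once case and whitespace are ignored, so a join on email could cross them.
crm = [
    {"email": "ana@example.com", "customer_id": 101},
    {"email": "Ana@Example.com ", "customer_id": 205},
    {"email": "bo@example.com", "customer_id": 102},
]

print(ambiguous_keys(crm, "email"))  # ['ana@example.com']
```

Any key value this returns deserves a manual look before the join runs, since rows matched on it may be attached to the wrong record.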

The Ripple Effect on Data Quality and Decision-Making

Data inaccuracies severely impact analytics and business decisions by:

- Producing incorrect data visualizations and misleading insights

- Generating flawed data-driven strategies and forecasts

- Confusing stakeholders and eroding their trust in data

- Increasing operational and compliance risks from acting on bad data

For example, swapped customer data could lead to inappropriate outreach, while mislabeled product categories could distort sales projections.

Real-World Consequences: Case Studies

- A retailer struggled with swapped customer data between systems, sending catalogs to the wrong people. This wasted resources and annoyed customers.

- A hospital mislabeled diagnosis codes when integrating data from multiple clinics. This skewed disease prevalence rates, causing them to miss a growing chronic condition among certain patients.

Careful data management and quality testing are crucial to prevent and catch errors before they propagate further downstream.

Error Detection: Uncovering Data Swapping and Mislabeling

Data swapping and mislabeling can undermine analysis and decision making. Detecting these errors early is key to maintaining data integrity. Here are some recommended techniques:

Manual vs. Automated Error Detection Strategies

Manual inspection of datasets can identify some issues, but has limitations:

- Time-consuming for large datasets

- Easy to miss errors

- Prone to human bias

Automated methods are more efficient and consistent:

- Statistical analysis to detect outliers

- Validation rules to check for anomalies

- Machine learning models to surface patterns

Automation enables continuous monitoring at scale. Manual review is still useful for sample checks. A hybrid approach tends to work best.

Implementing Data Validation Rules

Simple validation rules can catch many errors:

- Data type checks

- Range thresholds

- Pattern matching

- Referential integrity constraints

Rules can be customized for the expected data characteristics. Constraint violations indicate potential data issues for further investigation.
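The four rule types above can be sketched for a single record as follows. This is a minimal illustration, not a full validation framework: the `validate_order` function, the field names, the quantity limits, and the SKU format are all assumptions chosen for the example.

```python
import re

# Hypothetical SKU format: three capital letters, a dash, four digits.
SKU_PATTERN = re.compile(r"^[A-Z]{3}-\d{4}$")

def validate_order(order, known_customer_ids):
    """Return a list of rule violations for one order record."""
    problems = []
    qty = order.get("quantity")
    if not isinstance(qty, int):                      # data type check
        problems.append("quantity: not an integer")
    elif not 1 <= qty <= 1000:                        # range threshold
        problems.append("quantity: out of range")
    if not SKU_PATTERN.match(str(order.get("sku", ""))):   # pattern matching
        problems.append("sku: bad format")
    if order.get("customer_id") not in known_customer_ids:  # referential integrity
        problems.append("customer_id: unknown")
    return problems

customers = {101, 102}
good = {"quantity": 3, "sku": "ABC-1234", "customer_id": 101}
# The quantity and SKU values have been swapped between fields here.
swapped = {"quantity": "ABC-1234", "sku": 3, "customer_id": 101}

print(validate_order(good, customers))     # []
print(validate_order(swapped, customers))  # ['quantity: not an integer', 'sku: bad format']
```

Note that a swap between two fields with the same type and range would pass these checks, which is one reason rules are usually combined with the statistical methods discussed next.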

Leveraging Statistical and Machine Learning Techniques

More advanced techniques can uncover complex anomalies:

- Cluster analysis to detect outliers

- Classification models to identify mislabeled cases

- Neural networks to learn normal patterns

These approaches require statistical expertise but can greatly improve detection rates.
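As a small illustration of using a classifier to surface mislabeled cases, the sketch below applies a nearest-centroid rule: a record is flagged when its features sit closer to the average of a different class than to its own. The function name, the two numeric features, and the sample data are assumptions. A production setup would more likely use a dedicated machine learning library with cross-validation.

```python
from collections import defaultdict
from math import dist

def flag_suspect_labels(points, labels):
    """Return indices of points that lie closer to another class's centroid."""
    groups = defaultdict(list)
    for p, lab in zip(points, labels):
        groups[lab].append(p)
    # Centroid = per-dimension mean of the points carrying each label.
    centroids = {
        lab: tuple(sum(dim) / len(pts) for dim in zip(*pts))
        for lab, pts in groups.items()
    }
    suspects = []
    for i, (p, lab) in enumerate(zip(points, labels)):
        nearest = min(centroids, key=lambda c: dist(p, centroids[c]))
        if nearest != lab:
            suspects.append(i)
    return suspects

# Hypothetical (weight_kg, shipping_cost) pairs; the last record is labeled
# "small" but looks like the "large" group.
points = [(1.0, 1.2), (0.9, 1.0), (1.1, 0.9),
          (9.8, 10.1), (10.2, 9.9), (10.0, 10.0), (9.9, 10.3)]
labels = ["small", "small", "small", "large", "large", "large", "small"]

print(flag_suspect_labels(points, labels))  # [6]
```

One limitation of this simple version is that mislabeled records also pull their assigned class's centroid toward them, so heavily contaminated data can hide errors. Flags are candidates for review, not confirmed mistakes.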

Continuous Monitoring for Data Accuracy

Ongoing checks are essential to maintain quality:

- Schedule periodic reviews

- Automate validation rules

- Retrain machine learning models

Continuous monitoring enables early detection before downstream impacts. It also provides feedback to improve upstream processes.
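A scheduled check can be as simple as comparing each new batch against a trusted baseline. The sketch below flags a batch whose mean is implausibly far from the baseline mean. The `batch_drifted` name, the threshold of 3 standard errors, and the temperature readings are assumptions for illustration.

```python
from statistics import mean, stdev

def batch_drifted(baseline, batch, max_z=3.0):
    """Return True if the batch mean is far from the baseline mean."""
    base_mean = mean(baseline)
    # Standard error of a mean of len(batch) values drawn from the baseline.
    std_err = stdev(baseline) / len(batch) ** 0.5
    if std_err == 0:
        return mean(batch) != base_mean
    return abs(mean(batch) - base_mean) / std_err > max_z

# Hypothetical sensor readings in Celsius.
baseline = [20, 21, 19, 22, 20, 18, 21, 19]
todays_batch = [20, 19, 21, 20]
# Looks like Fahrenheit values loaded into a column labeled Celsius.
suspect_batch = [68, 70, 66, 72]

print(batch_drifted(baseline, todays_batch))   # False
print(batch_drifted(baseline, suspect_batch))  # True
```

Run on a schedule, a check like this can raise an alert the first time a mislabeled unit or swapped column enters the pipeline, rather than after reports have been built on it.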

In summary, a layered detection strategy, combining automation and human review, provides efficient and accurate error discovery. The key is developing a data-centric culture focused on quality and integrity.


Corrective Actions: Data Correction and Cleansing Techniques

Data quality is crucial for ensuring accurate analysis and decision making. When issues like data swapping or mislabeling occur, implementing corrective techniques helps restore integrity. Here we examine methods for efficiently detecting and resolving errors.

Manual Correction: A Hands-On Approach

Manually reviewing data entries to identify and fix incorrect values provides precise control, but demands extensive effort as dataset sizes grow. This labor-intensive process involves:

- Methodically scanning all data points across various fields and records.

- Flagging potential data anomalies based on domain knowledge and expected value ranges.

- Verifying and correcting bad values by cross-checking sources or collecting new data.

While robust, manual correction lacks scalability. Automation and statistical approaches address this limitation.
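The cross-checking step lends itself to a small helper even in a mostly manual workflow. The sketch below compares a working copy against a trusted reference and reports pairs of keys whose values appear to have been exchanged. The `find_swapped_pairs` name and the sample data are hypothetical, and it assumes a reliable reference source exists.

```python
def find_swapped_pairs(current, reference):
    """Find pairs of keys whose values are exchanged relative to the reference."""
    mismatched = [k for k in current
                  if k in reference and current[k] != reference[k]]
    pairs = []
    for i, a in enumerate(mismatched):
        for b in mismatched[i + 1:]:
            # A swap means each key holds the other's reference value.
            if current[a] == reference[b] and current[b] == reference[a]:
                pairs.append((a, b))
    return pairs

# Hypothetical customer_id -> email mappings.
reference = {101: "ana@example.com", 102: "bo@example.com", 103: "cy@example.com"}
current = {101: "bo@example.com", 102: "ana@example.com", 103: "cy@example.com"}

print(find_swapped_pairs(current, reference))  # [(101, 102)]
```

Because the output names the exact pair involved, the correction is a targeted swap back rather than a re-entry of both records.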

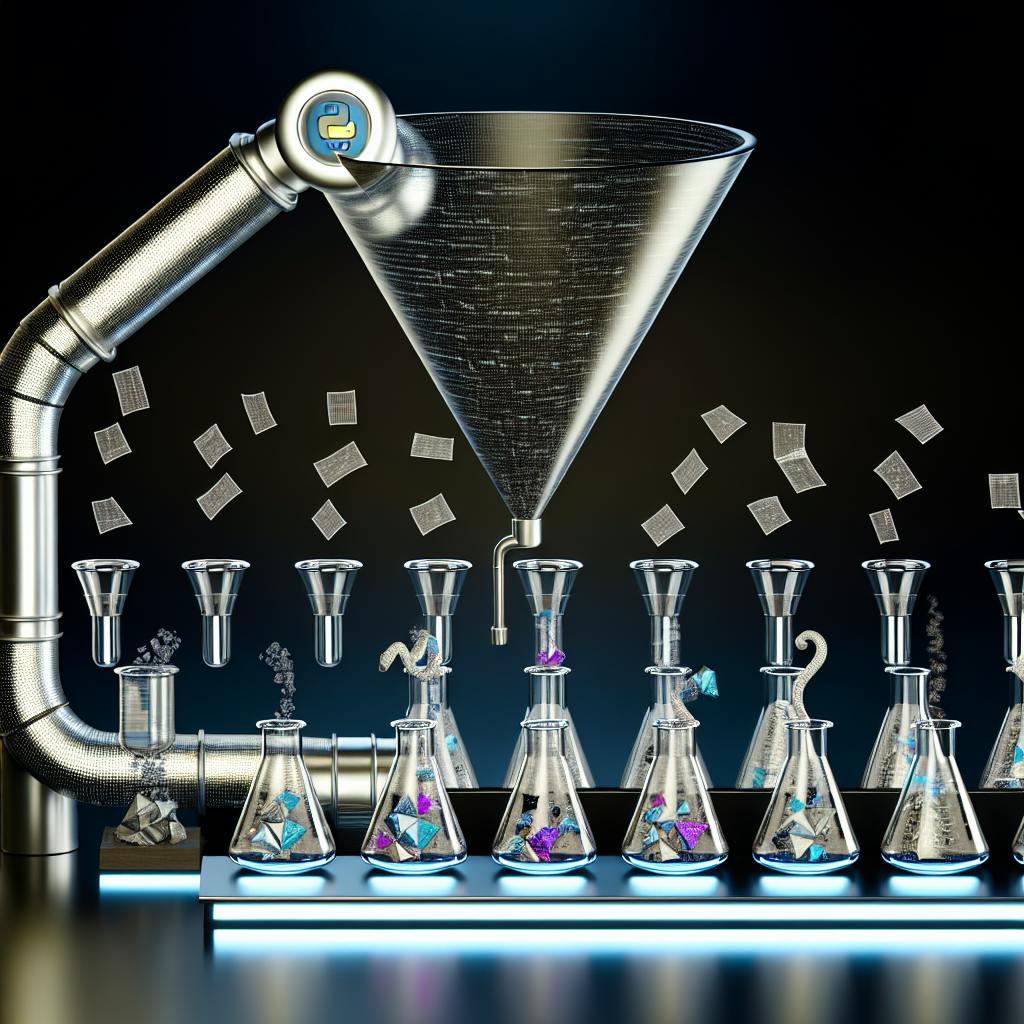

Automating Data Cleansing for Efficiency

Data cleansing software automates the detection and revision of errors, enabling rapid processing of big datasets:

- Customizable rule-based systems standardize formats and flag out-of-range values.

- Pattern recognition identifies improbable data relationships needing verification.

- Machine learning models highlight anomalies for human review.

Automated cleansing increases throughput and reduces manual effort, though flagged anomalies still benefit from human review.

Error Correction Techniques Using Statistical Imputation

Statistical imputation infers and replaces missing or abnormal observations by analyzing data distributions:

- Mean/mode substitution populates blanks with the average or most common existing value.

- Regression models predict replacements through variable correlations.

- Stochastic approaches randomly draw replacements from distributions.

By mathematically estimating replacements, imputation facilitates efficient correction at scale.
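Mean and mode substitution, the simplest of these approaches, can be sketched in a few lines. The `impute` function and its `strategy` parameter are assumptions for this example, and missing values are represented as `None`.

```python
from statistics import mean, mode

def impute(values, strategy="mean"):
    """Replace None entries with the mean or mode of the observed values."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("no observed values to impute from")
    fill = mean(observed) if strategy == "mean" else mode(observed)
    return [fill if v is None else v for v in values]

print(impute([10, None, 14, 12]))                    # [10, 12, 14, 12]
print(impute(["red", None, "blue", "red"], "mode"))  # ['red', 'red', 'blue', 'red']
```

Imputed values are estimates, not recovered originals. Where a swapped or mislabeled value can be restored from a source system, that is generally preferable to imputing it.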

Ensuring Consistency Through Data Preprocessing

Performing quality checks during preprocessing strengthens downstream processes:

- Standardizing formats, definitions, codes, etc. promotes uniformity.

- Establishing data pipelines with validation rules and alerts enhances monitoring.

- Documenting changes encourages accountability and process consistency.

Careful preprocessing is key for sustaining high-quality, usable data.
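Standardization and change documentation can be combined in one preprocessing step. The sketch below maps free-text values to canonical codes and records every change it makes. The `standardize` function, the `COUNTRY_CODES` mapping, and the `"UNMAPPED"` marker are illustrative assumptions.

```python
# Hypothetical mapping from normalized free text to canonical codes.
COUNTRY_CODES = {
    "us": "US", "usa": "US", "united states": "US",
    "uk": "GB", "gb": "GB", "united kingdom": "GB",
}

def standardize(records, field, mapping):
    """Return cleaned copies of the records plus a log of (index, old, new)."""
    cleaned, change_log = [], []
    for i, rec in enumerate(records):
        raw = rec[field]
        canonical = mapping.get(str(raw).strip().lower())
        if canonical is None:
            # Leave unknown values untouched, but record them for review.
            change_log.append((i, raw, "UNMAPPED"))
            cleaned.append(dict(rec))
        else:
            if canonical != raw:
                change_log.append((i, raw, canonical))
            cleaned.append({**rec, field: canonical})
    return cleaned, change_log

rows = [{"country": "usa"}, {"country": " UK "}, {"country": "US"}, {"country": "Atlantis"}]
cleaned, log = standardize(rows, "country", COUNTRY_CODES)

print([r["country"] for r in cleaned])  # ['US', 'GB', 'US', 'Atlantis']
print(log)  # [(0, 'usa', 'US'), (1, ' UK ', 'GB'), (3, 'Atlantis', 'UNMAPPED')]
```

Keeping the original records unmodified and returning a separate change log makes each correction auditable and reversible.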

Maintaining Data Quality: Strategies for Data Management and Integrity

Data quality and integrity are crucial for effective data management. Here are some strategies to help prevent issues like data swapping and mislabeling:

Crafting Robust Data Collection Protocols

- Create detailed guidelines that standardize data collection methods across teams and projects

- Define data types, formats, metadata standards, allowed values, etc. to minimize errors

- Document protocols for data entry, cleaning, labeling, storage, and sharing

Education and Training: Empowering Data Handlers

- Provide training to personnel involved in data collection and handling

- Ensure they understand the protocols and the importance of accuracy

- Refresh training periodically to maintain vigilance

Scheduled Data Audits for Quality Assurance

- Conduct regular spot checks and audits of datasets

- Review for anomalies, outdated info, typos, mislabeled data

- Verify integrity of data pipelines and workflows

Building a Culture of Data Accuracy

- Leadership should champion data quality initiatives

- Foster accountability at all levels for upholding standards

- Encourage transparency around issues to foster continuous improvement

Following robust protocols, delivering education, performing regular audits, and building a culture of quality are key strategies to uphold data integrity. This helps safeguard accuracy and prevent compromised analysis.

Conclusion: Synthesizing Techniques for Data Integrity and Accuracy

Data integrity is crucial for organizations to make informed decisions. Techniques to detect and correct issues like data swapping and mislabeling include:

- Performing exploratory data analysis to visually identify anomalies and outliers that may indicate errors. Useful methods include summary statistics, histograms, and scatter plots.

- Leveraging validation rules, constraint checks, and data type checks to automatically flag potential data errors. These should be customized using domain knowledge.

- Using data profiling tools to automatically scan for inconsistencies, invalid values, and duplicates. This is especially useful for large datasets.

- Applying data validation methods such as limit checks, reasonableness checks, and format checks to ensure values fall within expected ranges and formats.

- Performing periodic statistical analyses, such as mean/median/mode comparisons, to identify variances that indicate potential errors.

- Enabling data traceability via logs, audit trails, and version control so errors can be traced back to their source.

- Correcting errors after detection by updating incorrect values, removing duplicates and outliers, and imputing missing values.

Following best practices around governance, workflows, and access controls also helps promote data quality and integrity. Overall, a multilayered approach across analysis, validation, and governance is key to detecting and fixing issues.